Structuring Autoencoders for Sparsely Supervised Labeling

// Electronics and Electrotechnology // Information and Communication Technology // Measuring and Control Technology // Software

Ref-Nr: 16790

Abstract

The invention concerns a method for the cost-effective and time-efficient labeling and classification of data in neural networks, applicable in information and communication technology, e.g. in the fields of image and data processing, pattern recognition, speech recognition as well as in control engineering.

Background

Generating labeled data is extremely cost-intensive and error-prone. Using Amazon Mechanical Turk, for example, requires careful verification of the labeling results, automated relabeling, and filtering to retain the useful results.

Innovation / Solution

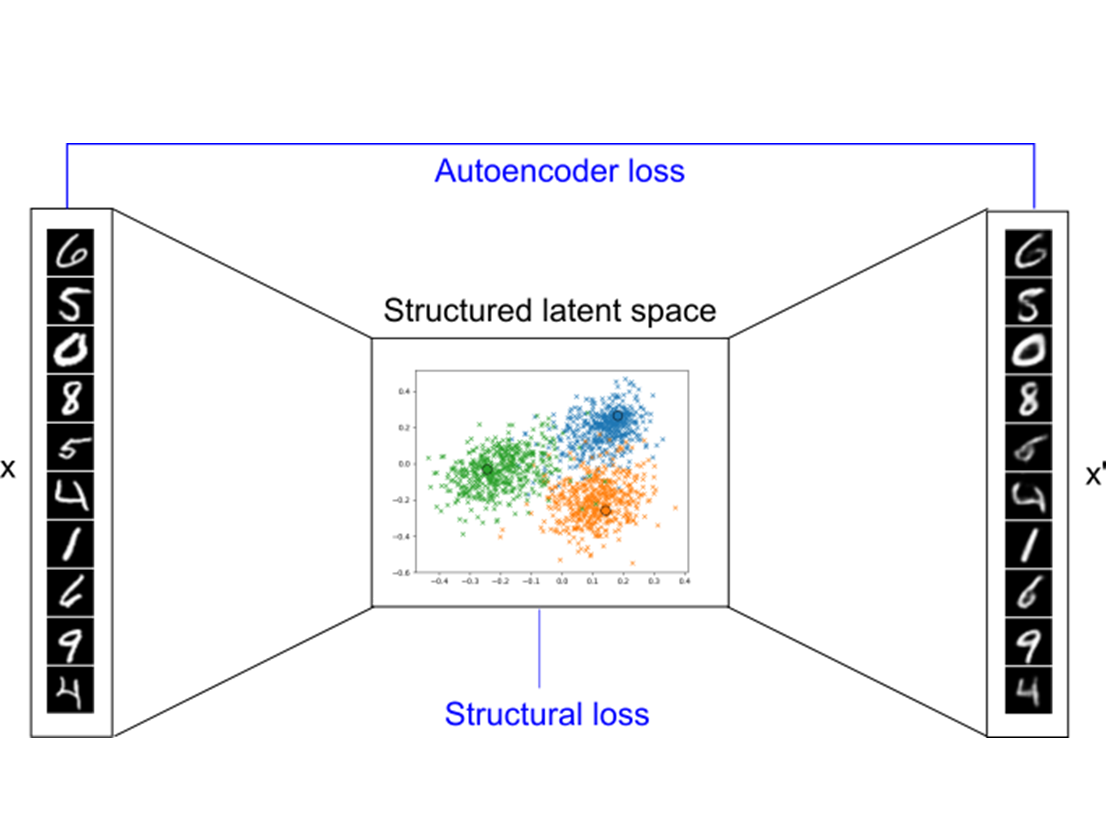

The quality of the data is more important than its quantity. Automated algorithms that can request which data should be labeled are therefore of particular economic interest. The invention "Structuring AutoEncoder" (SAE) addresses exactly this problem, among other aspects. SAEs are neural networks that are trained with a small amount of data and can optionally form a desired structure in latent space using predefined labels. Two novel loss functions optimize the reconstruction error on the one hand and the structural loss of the data in latent space on the other. This enables a semantically structured, low-dimensional representation of the data.

Benefits

Cost- and time-efficient labeling/classifying of data

Reducing the number of training cycles required

Optimization of autoencoders, e.g. with regard to classification options
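
The interplay of the two loss terms described under Innovation / Solution can be illustrated with a minimal sketch. This is not the patented formulation: it assumes that the desired latent structure is given as one fixed target point per label, that unlabeled samples contribute only to the reconstruction term, and that the two terms are combined by a simple weighted sum. All names (`reconstruction_loss`, `structure_loss`, `sae_loss`, `targets`, `weight`) are hypothetical.

```python
# Illustrative sketch only. The target layout, the weighting, and all
# function names are assumptions, not taken from the invention itself.

def reconstruction_loss(x, x_hat):
    """Mean squared error between an input vector and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def structure_loss(z, label, targets):
    """Squared distance between a latent code and the target point of its label.

    Unlabeled samples (label is None) contribute nothing, which is how
    sparse supervision is modeled in this sketch.
    """
    if label is None:
        return 0.0
    target = targets[label]
    return sum((a - b) ** 2 for a, b in zip(z, target))


def sae_loss(batch, targets, weight=1.0):
    """Average combined loss over a batch of (x, x_hat, z, label) tuples."""
    total = 0.0
    for x, x_hat, z, label in batch:
        total += reconstruction_loss(x, x_hat)
        total += weight * structure_loss(z, label, targets)
    return total / len(batch)


# Assumed latent layout: one 2-D target point per class.
targets = {"cat": (1.0, 0.0), "dog": (0.0, 1.0)}

batch = [
    # Labeled sample, perfectly reconstructed and placed on its target.
    ((0.5, 0.5), (0.5, 0.5), (1.0, 0.0), "cat"),
    # Unlabeled sample: only the reconstruction error counts.
    ((1.0, 0.0), (0.0, 0.0), (0.0, 0.0), None),
]

loss = sae_loss(batch, targets)
```

In a real training setup, `x_hat` and `z` would be produced by the decoder and encoder of the network, and the combined loss would be minimized by gradient descent. The sketch only shows how labeled and unlabeled samples could enter the same objective.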

Fields of application

Image and data processing, pattern recognition, control engineering, speech recognition, machine and plant construction.